This is something I tried before but didn’t complete for some reason. Now that I’m on vacation this week and I’ve got some extra time to play, I went back to finish it.

As you probably know by now, Windows 7 has the ability to boot from a .vhd file. This is awesome, as you can create a virtual testing environment that runs directly against your hardware.

There are a few gotchas, though. The OSes you can boot from a vhd are limited to Windows 7 and Windows Server 2008 R2. I’ve seen posts from people getting other OSes to run, but I haven’t tried. I’ve also seen warnings not to do this on a laptop, though I’ll try it once I install my new, bigger laptop hard drive next week.

Anyway, this is how I did it. I had created a Windows 7 virtual machine in Virtual PC 2007. I used the vhd from that VM instead of creating a new one, though you certainly could if you wanted to.

The first thing I did was to run sysprep inside my VM. I’m not an expert on sysprep; I just followed instructions I found on the web. Briefly, sysprep is a tool that strips the machine-specific configuration out of the image so that Windows detects and configures the hardware again the next time it boots, in this case on the physical machine. You’ll find it in C:\Windows\system32\sysprep. Run it as an admin. Leave the System Cleanup Action on the default, Enter System Out-of-Box Experience (OOBE), check the Generalize box, and set Shutdown Options to Shutdown. When sysprep finishes, it powers down your VM.
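The same three choices can also be passed to sysprep as command-line switches instead of clicking through the dialog. Here is a minimal sketch in Python, assuming sysprep lives in the default folder mentioned above. The helper names `sysprep_command` and `run_sysprep` are made up for illustration, and I only used the GUI myself, so treat this as an untested alternative.

```python
import subprocess

# Default location of sysprep on Windows 7 (assumption: a standard install on C:).
SYSPREP = r"C:\Windows\System32\sysprep\sysprep.exe"


def sysprep_command(exe=SYSPREP):
    """Return the argument list matching the GUI choices:
    OOBE cleanup action, Generalize checked, Shutdown when done."""
    return [exe, "/oobe", "/generalize", "/shutdown"]


def run_sysprep():
    """Run sysprep with those switches.

    Only call this inside the VM, from an elevated session:
    it generalizes the image and then shuts the machine down.
    """
    subprocess.run(sysprep_command(), check=True)
```

Calling `sysprep_command()` on its own just returns the list, so you can check the switches before running anything.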

I didn’t do this, but it’s probably a good idea to make a copy of the vhd at this point.

The next thing I did was to set up the Windows boot loader to see the vhd file. I opened an elevated command prompt and ran the following:

bcdedit /copy {current} /d "Win 7 VHD"

This returns a GUID, which I saved to Notepad. “Win 7 VHD” is the description I wanted to see on the boot menu. After that I ran these three commands:

bcdedit /set {guid} device vhd=[C:]\VM\Win7\Win7.vhd

bcdedit /set {guid} osdevice vhd=[C:]\VM\Win7\Win7.vhd

bcdedit /set {guid} detecthal on

In my example I replaced guid with the GUID I saved earlier, keeping the curly braces around it. \VM\Win7\ is the path to my vhd file, and Win7.vhd is the file I’m using. Note that the drive letter and its colon go inside square brackets: [C:].
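If you'd rather not copy and paste the GUID by hand, the commands are easy to generate. The sketch below is in Python and is not what I actually ran. The function names are hypothetical and the sample output line is a made-up example of what bcdedit prints after a copy. It pulls the GUID out of that text and builds the three `bcdedit /set` lines with the `[C:]` bracket syntax.

```python
import re

# A GUID in braces, e.g. {11111111-2222-3333-4444-555555555555}
GUID_RE = re.compile(r"\{[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}\}")


def extract_guid(copy_output):
    """Pull the {GUID} out of the text printed by `bcdedit /copy`."""
    match = GUID_RE.search(copy_output)
    if match is None:
        raise ValueError("no GUID found in bcdedit output")
    return match.group(0)


def vhd_boot_commands(guid, drive, vhd_path):
    """Build the three `bcdedit /set` commands for a vhd boot entry.

    guid     -- the identifier including its braces
    drive    -- drive letter only, e.g. "C"
    vhd_path -- path on that drive, starting with a backslash
    """
    target = "vhd=[{}:]{}".format(drive, vhd_path)
    return [
        "bcdedit /set {} device {}".format(guid, target),
        "bcdedit /set {} osdevice {}".format(guid, target),
        "bcdedit /set {} detecthal on".format(guid),
    ]


if __name__ == "__main__":
    # Made-up sample of the bcdedit /copy output, for illustration only.
    sample = "The entry was successfully copied to {11111111-2222-3333-4444-555555555555}."
    for line in vhd_boot_commands(extract_guid(sample), "C", r"\VM\Win7\Win7.vhd"):
        print(line)
```

The printed lines can be pasted into the elevated command prompt. I kept it as plain strings rather than running bcdedit from the script, so you get a chance to read the commands before they touch the boot configuration.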

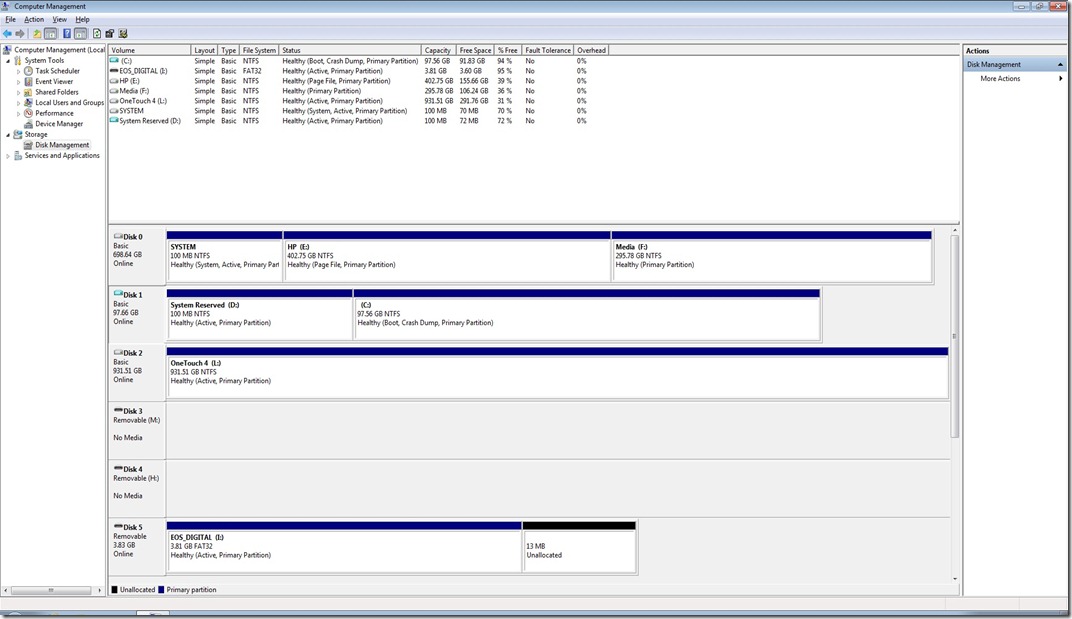

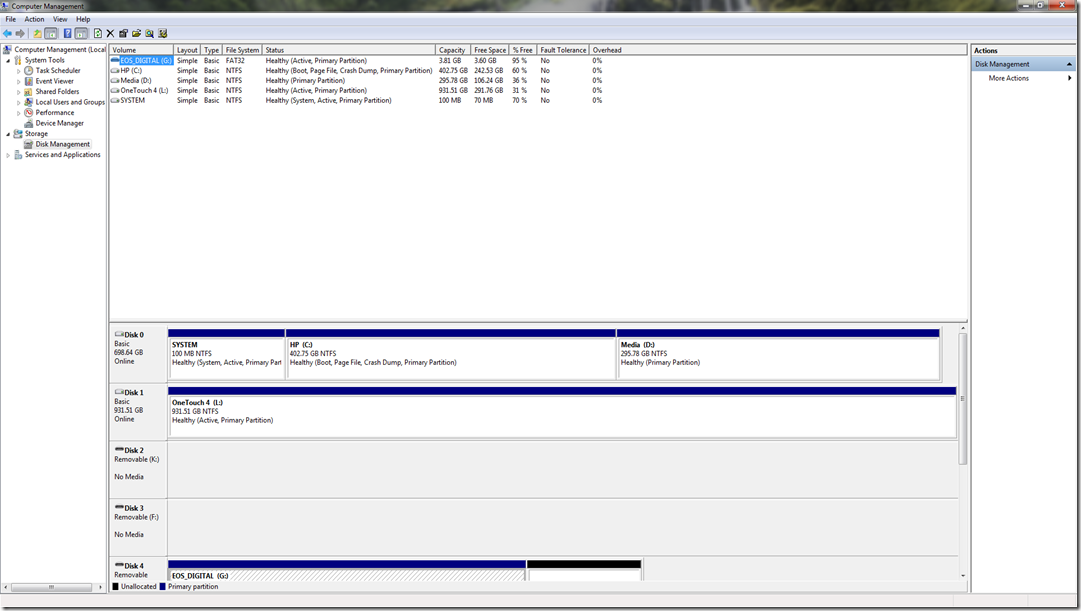

And that’s just about it. Once I restarted my computer I could see both my original Windows 7 installation and my new vhd boot option. When I chose the vhd, Windows started and applied the hardware changes. After that I just logged in and was running Windows from the vhd. Once in the virtual environment, I can see all the drives on the computer, including those for my “real” Windows 7. In Disk Management, notice that Disk 1 has a blue icon; this shows that it’s a vhd file. It also shows the System Reserved partition. I can also see the files on the other physical drives.

The reverse isn’t true: when I’m running my physical Windows 7, the vhd doesn’t show up as a disk on its own. I can mount the vhd file in Disk Management (on the menu go to Action > Attach VHD), but it doesn’t stay mounted between reboots. I haven’t tried mounting it with DISKPART yet; I’ll try that when I create my laptop VM.
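For the DISKPART route, the idea would be to feed diskpart a two-line script that selects the vhd file and attaches it. I haven't tried this yet, so the following is only a sketch in Python. The function names are made up for illustration, and the path in the usage note is the one from my bcdedit example.

```python
import os
import subprocess
import tempfile


def attach_vhd_script(vhd_file):
    """Return the text of a diskpart script that attaches the given vhd."""
    return 'select vdisk file="{}"\nattach vdisk\n'.format(vhd_file)


def attach_vhd(vhd_file):
    """Write the script to a temporary file and hand it to diskpart.

    Needs an elevated session on the physical Windows 7 install.
    """
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as handle:
        handle.write(attach_vhd_script(vhd_file))
    try:
        # diskpart /s runs the commands from the script file.
        subprocess.run(["diskpart", "/s", handle.name], check=True)
    finally:
        os.remove(handle.name)
```

Calling `attach_vhd(r"C:\VM\Win7\Win7.vhd")` would attach my vhd. Running something like this at startup might get around the vhd not staying mounted between reboots, but that is a guess until I test it.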

The only drawback is the vhd is not portable, and I can’t run it in Virtual PC 2007 anymore. I can probably run sysprep again to get it back, but I think I’ll keep it as it is for now.