Friday, December 31, 2010

Moving to new home

Thursday, December 16, 2010

SQL and Relational Theory Master Class by C.J. Date

Monday, October 4, 2010

What I learned today: No backup or restore with the DAC

For everything I teach, I learn something in return.

I began to train a new junior level DBA today. He’s someone that works with us already as a developer but he’s shown an interest in learning how to be a DBA so I’m beginning to teach him what I know. To start off we began by discussing his daily tasks, then we started to talk about backup and recovery, topics I gave him as an assignment last week. For this week’s homework I told him to read up on the Dedicated Administrator Connection (DAC) and to get familiar with SQLCMD. I mentioned how the DAC was useful in situations where the server was under a strain and you need a way to connect to troubleshoot the problem. Then I said you can use it for backups and recoveries.

I should have quit when I was ahead.

As my new colleague correctly pointed out, you can’t use the DAC for backups or recovery. If you try, you’ll get the following error:

Msg 3637, Level 16, State 3, Line 1

A parallel operation cannot be started from a DAC connection.

Msg 3013, Level 16, State 1, Line 1

BACKUP DATABASE is terminating abnormally.

And BOL can’t make it any clearer:

SQL Server prohibits running parallel queries or commands with the DAC. For example, error 3637 is generated if you execute either of the following statements with the DAC:

- RESTORE

- BACKUP

(Ref: http://technet.microsoft.com/en-us/library/ms189595.aspx)

For some reason I’d forgotten that. It was something that I had read a long time ago and forgot since I don’t use the DAC very often, never in production other than to confirm that it was set up through the firewall. I had assumed that backing up a database in an emergency would be one of the reasons I’d use the DAC. Luckily I was reminded before a situation arose where I relied on it.

So I actually learned two things today. I was reminded about the restriction of the DAC, and I learned to be more careful about what I tell others when it comes to SQL, not to assume facts and to do my own homework. I hope I remember both lessons equally.

Thursday, September 30, 2010

Using the IGNORE_DUP_KEY index option

First, create a small sample table. I used tempdb but feel free to use whatever test database you want. Then create a unique clustered index on the field1 column.

CREATE TABLE Table1 ( field1 int NOT NULL, field2 char(2) NOT NULL ) GO CREATE UNIQUE CLUSTERED INDEX cl_Table_1 ON Table1 ( field1 ASC ) GO

Next insert a few records into the table. I’m inserting records in two separate blocks to trap the intentional duplicate key error.

BEGIN TRY INSERT INTO Table1 VALUES(1, 'aa') INSERT INTO Table1 VALUES(2, 'ab') INSERT INTO Table1 VALUES(3, 'bb') END TRY BEGIN CATCH SELECT ERROR_MESSAGE () END CATCH BEGIN TRY INSERT INTO Table1 VALUES(4, 'cc') INSERT INTO Table1 VALUES(3, 'ac') INSERT INTO Table1 VALUES(5, 'dd') END TRY BEGIN CATCH SELECT ERROR_MESSAGE () END CATCH SELECT field1, field2 FROM Table1You won’t get any errors in the first block because all three values are unique as defined by the key. The second block will generate the duplicate key error when it tries to insert the value 3 into field1. At this point the error is trapped and value 5 doesn’t get inserted. Viewing the values shows the 4 records.

| field1 | fiels2 |

| 1 | aa |

| 2 | ab |

| 3 | bb |

| 4 | cc |

Now lets alter the index to ignore duplicate key errors. When you use this option any error is ignored and execution of the batch continues. Only the duplicate record is not inserted. We’ll insert three more records and again trap any errors.

ALTER INDEX cl_Table_1 ON Table1 SET ( IGNORE_DUP_KEY = ON ); GO BEGIN TRY INSERT INTO Table1 VALUES(6, 'zz') INSERT INTO Table1 VALUES(2, 'dd') INSERT INTO Table1 VALUES(7, 'cc') END TRY BEGIN CATCH SELECT ERROR_MESSAGE () END CATCHThis time no error is generated. The duplicate value of 2 is ignored and execution continues, meaning that the next record of 7 is also inserted. Viewing the values shows this.

| field1 | field2 |

| 1 | aa |

| 2 | ab |

| 3 | bb |

| 4 | cc |

| 6 | zz |

| 7 | cc |

Saturday, August 21, 2010

Does your company owe you training?

Recently I read an interesting post by Steve Jones (Blog | Twitter) on SQL Central about Personal Investments, how you invest in your career. It’s also something I’ve been thinking about lately. I agree with Steve that I’m responsible for my own education. But I feel that companies should be, to some extent, as well.

I fall into the “accidental dba” category. When a senior member of our team left I was assigned to work on maintaining and monitoring our databases, something no-one really did before that. It fell to me because I had the most knowledge about SQL Server, as opposed to just writing T-SQL statements. I learned what I could by going to all the free events I could find. I joined my local SQL User Group and attended as many meetings as possible. I bought and read many excellent SQL books and blogs. I took any free or cheap training resource that was available. Eventually I passed a number of Microsoft certification exams. I’m proud of what I’ve achieved on my own, and I will continue to further my technical education.

There’s a cost to my training, even if it’s free. There’s the hours of attending conferences and viewing webcasts. There’s the price of purchasing books, and the time it takes to read them. There’s even travel related costs, such as attending a SQL Saturday event in another state. I pay the cost gladly, and I’ve already seen the benefits.

But at what point should a company start providing training?

Now I don’t believe your company should always pay for your education. As Steve said in his post, you should make your own investment in your career, showing your managers the value of your training. You’re the one responsible for keeping your skills up to date. But it should be a partnership. Your company also needs to make an investment. They have as much to gain as you do.

This isn’t just to motivate an employee, giving you a nice job perk. Frankly, if you’re not self-motivated to get the basic skills needed to do your job maybe you should start looking for another position. But if your job suddenly requires specialized knowledge they should help you attain that knowledge.

Let’s say, for instance, that your company has decided to use clustering for high availability. And since you’re the SQL ‘expert” they’ve come to you to set it up. You’ve read up on clustering, and you know in theory how it works. But you’ve never worked in a clustering environment, let alone design the architecture involved. Shouldn’t your company help you learn what you need to know to succeed? After all, they have more at stake in this case than you do.

I just feel that companies should also contribute to your technical education. I’m interested in how other’s feel about it.

Monday, August 16, 2010

What I learned today – CHECKDB and database snapshots

A few days ago I was sitting in my local coffee shop, getting a little light reading done. At work we’re about to move from the Standard Edition of SQL 2005 to the Enterprise Edition and I wanted to learn more about some features of database snapshots. Snapshots are basically just a read-only copy of a database taken at a specific time. Anyway I was reading Chapter 3 – Database and Database Files in the excellent book Microsoft SQL Server 2008 Internals. The discussion turned to space allocated for the snapshots. That’s when I read that DBCC CHECKDB actually runs against a snapshot of the database.

That caught me by surprise. Until then I thought that the CHECKDB ran against the database itself.

Now I thought I was doing OK with protecting our databases. I faithfully run CHECKDB weekly against all databases and check for any errors. I set the CHECKSUM option and verify backups. I occasionally restore databases on a standby server to make sure there’s no problems with the backups. And I monitor database and drive space pretty closely.

But I was flying blind. I have no idea how near I came to having CHECKDB fail because of a lack of space. So I started digging a little deeper into CHECKDB. And I was able to learn a little bit more of how CHECKDB works with snapshots.

For anything concerning CHECKDB, your first resource should be Paul Randal (Blog | Twitter). Read his excellent series on CHECKDB From Every Angle to get a good understanding of what’s going on. And he also wrote Chapter 11 – DBCC Internals in Microsoft SQL Server 2008 Internals, a great book you should read anyway.

CHECKDB needs a consistent view of the database to work with. In SQL 7 it acquired table locks. In SQL 2000 it analyzed the transaction log. Beginning with SQL 2005 and going forward it creates a hidden snapshot on the same volume as the database – you have no control over where it’s placed. If you’re running CHECKDB at the same time that your server has a heavy workload the snapshot can run out of space and you’ll get an error showing that CHECKDB didn’t complete. If that happens you have a few options;

- Create your own database snapshot on a drive that has enough space and run CHECKDB against that snapshot. CHECKDB will know that its running against a snapshot and won’t create another one.

- Start the SQL service in single user mode.

- Put the database in read-only mode.

Once CHECKDB has finished it will delete the snapshot if it created one.

But there are a few gotcha’s with CHECKDB creating the snapshot. First, it has to be created on an NTFS drive. Second, you can’t use the WITH TABLOCK hint. And third, you can’t run against tempdb; but why would you want to?.

I’m sure I’m over simplifying. I still need to finish Chapter 11.

One other thing I’m wondering. If CHECKDB can create a snapshot database in SQL 2005 regardless of the edition, can the Standard Edition create one with a hidden flag?

Monday, August 9, 2010

Why I bought an iPad

Disclaimer

I bought my new iPad a week ago. I thought I’d write a post explaining why I bought one, and kind of giving it a review against other eReaders I’ve used. But I noticed that Steve Jones wrote something similar at SQL Musings a few days ago. I had almost finished my post when I read it, and I decided to go ahead and finish it with this disclaimer. But please read Steve’s post, he raises some excellent points.

Background

I read. A lot. Always have. And since I have a long daily commute I have a lot of time in which to read. My tastes range from light summer reading, books that are perfect for taking to the beach or on vacation, to heavily technical training manuals.

My problem is what to carry with me. I usually use my morning commute to study or to read the news. The afternoons are most likely spent doing research. Nights and weekends are for reading the latest novel. For instance I’m currently studying for the SQL 2008 Business Intelligence certifications so I’m going through the MS Press Training Kit for 70-448. But I’m also reading Carl Hiassen’s latest novel Star Island. And I’ve got James Lee Burke’s The Glass Rainbow on tap.

Older Devices

I’ve been reading eBooks for a while now, on any number of devices. I started by reading pdf files on my desktop computer. Then came Microsoft Reader and their .lit files. Of course Word documents and text files were included.

But the problem with using a desktop computer was that it wasn’t portable. Once I got an IT job I could justify buying a laptop computer. Even more handy were PDA devices, precursors to the smart phones of today. Each of these devices also had issues. Laptops, at least the ones I had years ago, were bulky, took a while to boot up, and generally didn’t have a lot of extra storage. And PDA screens were too small for my liking. If I made the font large enough to read comfortably then I was turning pages constantly.

My devices now

Enter the Amazon Kindle. I know that the Sony Reader came first, but it never really tempted me. I thought the price was too high and the book selection was too small. I thought the same of the Kindle 1 when it first came out. It wasn’t until the Kindle 2 was released, with a growing number of eBooks and a cheaper price, that I got behind it.

I also had a different laptop now, an HP Pavilion TX2000 that’s a Tablet PC with a swivel screen that can run as a slate or a classic laptop. This 3 year old PC has 4 GB of RAM and a 500 GB hard drive so it can run pretty much anything I want.

And as you can tell from the title of this post, I just bought an Apple iPad. I’ve got the 16 GB WiFi model, more than enough storage for my pdfs and other documents.

I should also say I’ve ruled out the Nook from Barnes and Noble as too much like the Kindle. I know it can share books, but I don’t know anyone else with a Nook and I don’t think the eBook selection is as good as Amazon.

Kindle pros and cons

I’ve been using the Kindle 2 now since it came out and I love most of it. It’s light, easy to hold in one hand while reading. It has a large enough storage capacity of 1.5 GB, free 3G Whispernet connectivity and direct connect to Amazon or other sites to download a book in 60 seconds. You can adjust the font and the number of words per line, and the readability on the Kindle is the best of my three main devices. The battery life is 2 weeks with 3G off, 4 days with it on. And you can read in direct sunlight, so I can read just about anywhere. There’s no back light, but I have a clip-on for the few times I read in bed. The last Kindle upgrade added collections, a way of grouping my books by category.

My only regret about the Kindle is that it’s not the best device to read technical books or pdfs on. Originally, I could email a pdf to a special Kindle account and it would convert it to a Kindle-formatted ebook. Later there was an update that allows me to copy a pdf directly to the Kindle. And there’s always been 3rd party tools like MobiReader and Calibre that could convert pdfs to a format that Kindles can read. However big pdfs are a problem, and converted pdfs never really convert cleanly. The 2.5 update allows zooming into pdfs and you can rotate the screen, but there’s still an uncomfortable amount of scrolling and page turning that makes reading pdfs on the Kindle very difficult, at least for me.

Tablet PC pros and cons

I love my tablet pc. It fits my needs as a laptop computer, allowing me to run every application I want, plus it’s Wacom and touch enabled so I can take handwritten notes. Amazon and other eBook manufacturers have released a Kindle app for pcs so I can sync my Kindle books between devices. I’ve got built-in WiFi and a Sprint 3G data card so I can connect to the internet from just about anywhere. Using Adobe Reader allows me to size my pdfs to any size I need. And when I’m studying its great to have the ability to work directly in another application to try out some of the things I’m reading about in my current pdf.

But the tablet pc is the most expensive device. It’s also has the least amount of battery life, though I have more than enough for my daily commutes. It takes the longest to start up either cold or warm. It’s the bulkiest by far. And the screen is unreadable in sunlight.

iPad pros and cons

I fought getting an iPad for a while, I really did. I thought for the most part it would just duplicate what i could already do on my tablet pc. But it does have some distinct advantages. First, it’s much lighter. Not as light as my Kindle but pounds less that my tablet. It starts up almost immediately. The larger screen makes the iPad ideal for my technical documents. I can browse the web and read emails through the WiFi connection. And like my tablet, there’s a Kindle app so I can sync up my Amazon books.

Again, like the tablet, I can’t read the screen outdoors. It’s far more expensive than the Kindle ($499 vs $139 for the lowest priced models) but not as expensive as my tablet. Battery life is started at 10 hours. That’s acceptable for my commutes but I’d have to carry a charger for vacations.

My conclusions

I really want to have a single ebook reader but my perfect device isn’t out there just yet. I want a device that can easily read all formats, has great battery life, is small enough to be carried easily, can be read in all light conditions, has Internet connectivity through WiFi or 3G, and is affordable. Until that device is available I’ll be using my iPad for technical readings and my Kindle for my more causal readings.

Tuesday, July 27, 2010

Creating your own default trace

Are you aware of the default trace that SQL Server runs when it starts? Did you know it’s configurable?

No, you can’t edit it. But you can still run a trace on startup that captures only the events that you want.

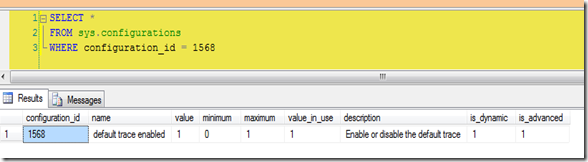

The first thing you should do is to see if the default trace is enabled. Simple enough, just run sp_configure with advanced options and look for 'default trace enabled'. Or query sys.configurations for configuration_id 1568.

SELECT *

FROM sys.configurations

WHERE configuration_id = 1568

On my laptop it is.

There’s a few ways to see what the trace is doing. First find the trace id. It should be 1, but you can make sure…

SELECT * FROM sys.traces

SELECT * FROM ::fn_trace_getinfo(0)

…then query fn_traace_geteventinfo(1) to see what events the default trace is looking at. You can see the descriptions of the events by joining to some system views.

SELECT tc.name AS [Category],

dt.eventid, te.name AS [Event Name],

dt.columnid, tcol.name AS [Column Name]

FROM ::fn_trace_geteventinfo(1) AS dt

INNER JOIN sys.trace_events AS te ON dt.eventid = te.trace_event_id

INNER JOIN sys.trace_columns AS tcol ON dt.columnid = tcol.trace_column_id

INNER JOIN sys.trace_categories AS tc ON te.category_id = tc.category_id

But what if, after looking at the events that the default trace is looking at, you decide to add to the list? Or maybe you want to skip some events? Remember, you can’t alter the default trace.

First disable the default trace. Use sp_configure, the change doesn’t require a restart. It will also close the trace.

sp_configure 'default trace enabled', 0;

go

reconfigure

Next create a stored procedure in the master database that will create and start your trace. Use sp_trace_create to generate a trace id, use sp_trace_setevent to select the events you want to capture, and finally start the trace with sp_trace_setstatus. Read more about creating traces here. If you aren’t familiar with creating traces via T-SQL use SQL Profiler to capture the events you need and set whatever properties you need then extract the trace properties to a script file.

Finally mark your new procedure to start when SQL does by using sp_procoption. This procedure takes 3 parameters; the name of your procedure, the option ‘startup’, and a true or false value. ‘Startup’ is the only option available, true sets to autostart, and false sets to not autostart.

In my demo script below I’ve created a trace that will track what a certain user does in the database as well as when they log in or out.

USE [master]

GO

CREATE PROCEDURE [dbo].[MyDefaultTrace]

AS

begin

declare @rc int

declare @TraceID int

declare @maxfilesize bigint

declare @filecount int

set @maxfilesize = 100

set @filecount = 5

exec @rc = sp_trace_create @TraceID output, 2,

N'C:\Perflogs\MyDefaultTrace',

@maxfilesize, NULL, @filecount

declare @on bit

set @on = 1

exec sp_trace_setevent @TraceID, 14, 7, @on

exec sp_trace_setevent @TraceID, 14, 23, @on

exec sp_trace_setevent @TraceID, 14, 8, @on

exec sp_trace_setevent @TraceID, 14, 64, @on

exec sp_trace_setevent @TraceID, 14, 1, @on

exec sp_trace_setevent @TraceID, 14, 9, @on

exec sp_trace_setevent @TraceID, 14, 21, @on

exec sp_trace_setevent @TraceID, 14, 25, @on

exec sp_trace_setevent @TraceID, 14, 41, @on

exec sp_trace_setevent @TraceID, 14, 49, @on

exec sp_trace_setevent @TraceID, 14, 57, @on

exec sp_trace_setevent @TraceID, 14, 2, @on

exec sp_trace_setevent @TraceID, 14, 6, @on

exec sp_trace_setevent @TraceID, 14, 10, @on

exec sp_trace_setevent @TraceID, 14, 14, @on

exec sp_trace_setevent @TraceID, 14, 26, @on

exec sp_trace_setevent @TraceID, 14, 66, @on

exec sp_trace_setevent @TraceID, 14, 3, @on

exec sp_trace_setevent @TraceID, 14, 11, @on

exec sp_trace_setevent @TraceID, 14, 35, @on

exec sp_trace_setevent @TraceID, 14, 51, @on

exec sp_trace_setevent @TraceID, 14, 12, @on

exec sp_trace_setevent @TraceID, 14, 60, @on

exec sp_trace_setevent @TraceID, 15, 7, @on

exec sp_trace_setevent @TraceID, 15, 15, @on

exec sp_trace_setevent @TraceID, 15, 23, @on

exec sp_trace_setevent @TraceID, 15, 8, @on

exec sp_trace_setevent @TraceID, 15, 16, @on

exec sp_trace_setevent @TraceID, 15, 64, @on

exec sp_trace_setevent @TraceID, 15, 9, @on

exec sp_trace_setevent @TraceID, 15, 17, @on

exec sp_trace_setevent @TraceID, 15, 41, @on

exec sp_trace_setevent @TraceID, 15, 49, @on

exec sp_trace_setevent @TraceID, 15, 57, @on

exec sp_trace_setevent @TraceID, 15, 6, @on

exec sp_trace_setevent @TraceID, 15, 10, @on

exec sp_trace_setevent @TraceID, 15, 14, @on

exec sp_trace_setevent @TraceID, 15, 18, @on

exec sp_trace_setevent @TraceID, 15, 26, @on

exec sp_trace_setevent @TraceID, 15, 66, @on

exec sp_trace_setevent @TraceID, 15, 3, @on

exec sp_trace_setevent @TraceID, 15, 11, @on

exec sp_trace_setevent @TraceID, 15, 35, @on

exec sp_trace_setevent @TraceID, 15, 51, @on

exec sp_trace_setevent @TraceID, 15, 12, @on

exec sp_trace_setevent @TraceID, 15, 60, @on

exec sp_trace_setevent @TraceID, 15, 13, @on

exec sp_trace_setevent @TraceID, 15, 21, @on

exec sp_trace_setevent @TraceID, 43, 7, @on

exec sp_trace_setevent @TraceID, 43, 15, @on

exec sp_trace_setevent @TraceID, 43, 8, @on

exec sp_trace_setevent @TraceID, 43, 64, @on

exec sp_trace_setevent @TraceID, 43, 1, @on

exec sp_trace_setevent @TraceID, 43, 9, @on

exec sp_trace_setevent @TraceID, 43, 41, @on

exec sp_trace_setevent @TraceID, 43, 2, @on

exec sp_trace_setevent @TraceID, 43, 10, @on

exec sp_trace_setevent @TraceID, 43, 3, @on

exec sp_trace_setevent @TraceID, 43, 11, @on

exec sp_trace_setevent @TraceID, 43, 35, @on

exec sp_trace_setevent @TraceID, 43, 51, @on

exec sp_trace_setevent @TraceID, 43, 12, @on

exec sp_trace_setevent @TraceID, 43, 5, @on

exec sp_trace_setevent @TraceID, 43, 13, @on

exec sp_trace_setevent @TraceID, 43, 6, @on

exec sp_trace_setevent @TraceID, 43, 14, @on

exec sp_trace_setevent @TraceID, 43, 62, @on

exec sp_trace_setevent @TraceID, 45, 7, @on

exec sp_trace_setevent @TraceID, 45, 8, @on

exec sp_trace_setevent @TraceID, 45, 64, @on

exec sp_trace_setevent @TraceID, 45, 1, @on

exec sp_trace_setevent @TraceID, 45, 41, @on

exec sp_trace_setevent @TraceID, 45, 10, @on

exec sp_trace_setevent @TraceID, 45, 3, @on

exec sp_trace_setevent @TraceID, 45, 11, @on

exec sp_trace_setevent @TraceID, 45, 35, @on

exec sp_trace_setevent @TraceID, 45, 51, @on

exec sp_trace_setevent @TraceID, 45, 12, @on

exec sp_trace_setevent @TraceID, 45, 5, @on

exec sp_trace_setevent @TraceID, 45, 13, @on

exec sp_trace_setevent @TraceID, 45, 6, @on

exec sp_trace_setevent @TraceID, 45, 14, @on

exec sp_trace_setevent @TraceID, 45, 62, @on

exec sp_trace_setevent @TraceID, 45, 15, @on

exec sp_trace_setevent @TraceID, 41, 7, @on

exec sp_trace_setevent @TraceID, 41, 15, @on

exec sp_trace_setevent @TraceID, 41, 8, @on

exec sp_trace_setevent @TraceID, 41, 64, @on

exec sp_trace_setevent @TraceID, 41, 1, @on

exec sp_trace_setevent @TraceID, 41, 41, @on

exec sp_trace_setevent @TraceID, 41, 10, @on

exec sp_trace_setevent @TraceID, 41, 3, @on

exec sp_trace_setevent @TraceID, 41, 11, @on

exec sp_trace_setevent @TraceID, 41, 35, @on

exec sp_trace_setevent @TraceID, 41, 51, @on

exec sp_trace_setevent @TraceID, 41, 12, @on

exec sp_trace_setevent @TraceID, 41, 5, @on

exec sp_trace_setevent @TraceID, 41, 13, @on

exec sp_trace_setevent @TraceID, 41, 6, @on

exec sp_trace_setevent @TraceID, 41, 14, @on

-- Set the Filters

declare @intfilter int

declare @bigintfilter bigint

exec sp_trace_setfilter @TraceID, 11, 0, 6, N'BadUser'

exec sp_trace_setstatus @TraceID, 1

end

GO

EXEC sp_procoption N'MyDefaultTrace', 'startup', '1'

GO

Now when I check to see what traces are running trace id #1 is the trace created in my procedure. The trace start is also written to the SQL error log when the master database starts.

As always, please test this on a server you can play with before putting into production.

Wednesday, July 21, 2010

Winning the DBA Open

Last weekend I, like millions of other golf fans around the world, watched as Louis Oosthuizen, an unknown (at least to me) golfer out of South Africa, won The Open Championship at St Andrews by an astounding 7 strokes. He showed confidence in his abilities throughout the tournament, never losing his composure playing in some of the toughest conditions on any course in the world. Congratulations, Louis!

As i watched the game, I began to think that being a DBA and being a champion golfer weren’t that different. Both need to be confident in their abilities, both need to have a solid game plan, and both have to be able to execute their craft under pressure, being prepared for any eventuality.

Preparing for the tournament

A good golfer gets to the course early, days before the tournament begins. They play the course, talk to locals to learn any particular quirks the course may have, and practices hundreds of shots of different lengths or difficulties.

Good DBAs do the same. They find out about the host server, talking to the system team or SAN administrator; what kind of disk subsystem is there? what’s the memory or CPU like? does the server meet the requirements for SQL?

You drive for show…

The golfer starts his round on the tee. He’ll determine the club to use based on experience and conditions; where’s the wind coming from? how long is the hole? where’s the danger spots? A well hit drive will end up far down the fairway, perfectly placed for his next shot.

The DBA does the same when he sets up the server. His experience comes into play knowing what has to be done, and server conditions will help him to correctly place the system databases and data and log files for other databases. He’ll know what options to set and what to set them to. His server will be set up to optimal conditions, perfectly placed for hosting the company data.

…the approach shot…

Now the golfer will try to get his ball onto the green. He’ll again choose the right club, line up the shot, take a practice swing or two, then hits his best shot. He’ll know how his ball will fly because he has hit the same shot hundreds of times before so he can compare against past performance.

The DBAs approach shot is to benchmark his system. He should know how the server will perform during peak and non-peak times.

---getting out of a hazard…

Sometimes, though, the golfer’s approach shot is mishit. Or maybe a gust of wind holds up the shot and it lands in a greenside bunker. A good golfer knows how to get out of those situations and save par.

Similarly, a DBA must know how to get out of disaster scenarios. He knows he has set up and is maintaining a proper backup strategy and has practiced recovering databases until he knows the procedure inside and out. He knows the different options available for DBCC CHECKDB.

---and you putt for dough

This is where the good golfers shine. They don’t fade when standing over a three foot putt with the tournament on the line. They know they’ve made the same putt over and over before, and they’ve practiced for just this occasion. They read the putt’s line, judge the speed, and with millions of eyes watching they calmly knock it hit it center cup.

The DBA also is good under pressure. They’ve written and checked over their scripts, and they’re confidant they’ll perform as written. They don’t wilt when the CIO is standing over their shoulder asking why performance is so bad on their server.

The caddy…

All good golfers know the value of their caddy. A caddy’s job is to help his golfer, pointing out the course yardage, offering the right club, taking some of the pressure of the golfer. Sometimes the caddy is also a teacher, pointing out flaws in the golfer’s swing.

A DBA also needs a caddy. Their caddy can be a mentor or coworker at work. They can use social networks for advice on specific problems or as a sounding board.

Preparing for the next tournament

The golfer is always practicing. He’s at the driving range on his day off, constantly working on correcting swing flaws and working on new shots.

Likewise the DBA is constantly learning. They attend their local PASS chapter meetings or go to national conventions. They’re constantly reading blogs and whitepapers, learning new skills to help them manage their servers or help their company.

The moral? Like in golf, or any endeavor, the secret of being a good DBA is to be prepared. Learn your craft. Ask your caddy when you have questions. Practice when you’re able. Keep learning. And you too can win the DBA Open.

Monday, July 19, 2010

What I learned today – importing mixed data types from Excel

Recently a coworker came up with an issue I never ran into before. He’s importing a large number of Excel spreadsheets into a SQL 2005 instance. One of the columns contains mixed data; 1, 2, 3, x, y, z for example. What he found is that if the first 20 or so rows are strictly numeric then the connection treats the whole column as numeric, and alphanumeric values are returned as NULLs. He’s querying using OPENROWSET, but it looks like this happens in SSIS as well.

What his research found was that there’s a few registry keys that come into play. There’s also another property he needed to set in his connection string. When he adds “IMEX=1” it allows importing of mixed data types based on the the registry setting “ImportMixedTypes”. The default value is “text”. One drawback to text is that you’re limited to 255 characters. The only other available value is “Majority Type”. When you use Majority Type some of the values are scanned and the data type with the most values is used.

The number of rows scanned is based on the “TypeGuessRow” registry setting. By default it’s set to 8, but valid values are 0 to 16. If you set it to 0 then all rows are sampled. Setting it to a value between 1 and 16 samples that number.

Both keys can be found at HKEY_Local_Machine/Software/Microsoft/Jet/4.0/Engines/Excel/.

Here are a few articles that explain the issue better.

http://jingyang.spaces.live.com/blog/cns!CC21A118B1B5250!318.entry?sa=781031802

http://www.instantpages.ltd.uk/ADODB_WP.htm

UPDATED 7/20/2010: You can read more about the actual issue here.

Wednesday, July 14, 2010

Changing the name of your SQL server

My company recently changed their standard naming conventions for computers, so yesterday I had to rename my workstation. Usually this isn’t a big deal, except that I’m running locally a default instance of SQL 2005 and a named instance of SQL 2008.Again, not a big deal since this is just my local playground. But I wanted to sync up the names.

Let’s say that my laptop was named “CHICAGO”. That makes the default instance also “CHICAGO'”, and my named instance “CHICAGO\KATMAI”. Now my laptop name changed to “NEWCHICAGO”. My SQL instances stay as “CHICAGO” and “CHICAGO\KATMAI”. How do you change them to match the new computer name?

Couldn’t be simpler, just execute two procedures. For the default instance.

USE master;

GO

EXEC sp_dropserver 'CHICAGO';

GO

EXEC sp_addserver 'NEWCHICAGO', local;

GO

It’s the same for a named instance. Just add the instance name.

USE master;

GO

EXEC sp_dropserver 'CHICAGO\KATMAI';

GO

EXEC sp_addserver 'NEWCHICAGO\KATMAI', local;

GO

Then, just restart the SQL service and you should see the name change.

Note, though, that this only changes the server name, not the instance name. I haven’t had an opportunity to try that yet.

Tuesday, July 13, 2010

Another use for PowerShell and SQL

A few months ago I wrote a PowerShell script that looped through servers and databases to save the properties inside an Excel spreadsheet (PowerShell and SQL). I thought it’s time to add another one.

One of the daily tasks I set up for myself as a SQL DBA is to go through the error logs for anything that may be an indicator of a problem. This is especially true on Sunday afternoons or Monday mornings, after my weekly DBCC CHECKDB job runs. I’ve configured the jobs to email me the results, but since I’m monitoring 10 instances they can be time consuming to read through. I usually just cut to the bottom, where DBCC reports the number of errors it finds. If the number of errors is 0, all is well and good. Otherwise, of course, further investigation is needed.

This seemed like a natural for a new PowerShell script. One of the great strengths of PowerShell is it’s ability to connect to as many remote servers as needed. And since I knew that the DBCC CHECKDB summary is written in the error log all I need to do is to read the logs. I had read this post by Buck Woody (blog | twitter) about doing almost exactly what I wanted to do. I merged his script with my earlier script and made a few other changes. My final code looks like this:

[System.Reflection.Assembly]::LoadWithPartialName('Microsoft.SqlServer.SMO') | out-null

#Get SQL account information

$credential = Get-Credential

$loginName = $credential.UserName -replace("\\","")

$password = $credential.Password

#Get variables

$logDate = read-host("Enter log date")

$searchPhrase = "*"

$searchPhrase += read-host("Enter search phrase")

$searchPhrase += "*"

$srvlist = @(get-content "C:\Power\SQL_Servers.txt")

#Loop through all instances

foreach ($instance in $srvlist)

{

$instance

$srv = New-Object ('Microsoft.SqlServer.Management.Smo.Server') $instance

$srv.ConnectionContext.LoginSecure = $false

$srv.ConnectionContext.set_Login($loginName)

$srv.ConnectionContext.set_SecurePassword($password)

$srv.ReadErrorLog(0) | where {$_.LogDate -gt $logDate -and $_.Text -like $searchPhrase}

}

Since I don’t always access the servers from a computer on the domain, I added login credentials. You can remove these if you want to use Windows authentication. After that I read a date and search phrase I type in. I found that this can come in handy when I want to change what I’m searching for. For instance, I can enter the phrase “DBCC CHECKDB” and the script will return the summaries based on the date I entered. Or I can search for “BACKUP” or “ERROR”, whatever my heart desires.

Tuesday, July 6, 2010

Stop SQL Agent when enabling Service Broker on msdb

I forget this every time I set up a new server.

When I set up database mail on anew server I usually get the message “Service Broker is not enabled on msdb. Do you want to enable it?” Clicking OK gives me an unresponsive SQL server. Running the command “ALTER DATABASE msdb SET Enable_Broker” runs forever with no message returned.

What I forget each and every time is that the SQL Agent is running. Checking the locks or running sp_who2 shows that my ALTER DATABASE is blocked by the process running the Agent. Simply stopping the Agent allows the statement to finish, whether from the query window or clicking OK in the dialog box mentioned earlier. Then I just restart the Agent and everything’s hunky-dory again.

Maybe now I’ll remember it.

Monday, July 5, 2010

My favorite SQL features

Recently I ran across a contest sponsored by Microsoft SQL Server MVP Ken Simmons (Blog | Twitter). One of the ways to enter the contest was to create a blog post listing favorite SQL features. But how do you select a favorite? That’s like asking someone to choose a favorite song. Like most things the the SQL world, the correct answer is “it depends”, usually on what I’m trying to do at the time.

So here’s my top 10 favorite SQL features and tips, in the order that they come to mind. I’m ignoring features I’m not currently using, like backup compression and data encryption, and limiting myself to those I use the most often.

- Integration with PowerShell. This is a great feature to administer multiple SQL instances easily. For those who haven’t been on the internet in the last few years, PowerShell is Microsoft’s new scripting language based on the .NET framework. And starting with SQL 2008, PowerShell became integrated with SQL. What does this mean to you as a DBA? Well, imagine yourself as the new head DBA for your company, and you’re now in charge of 100 SQL instances. Your boss tells you that your first task is to standardize all instances. How can you quickly view the properties of each server? With PowerShell and remoting, you can easily query each instance and save the results to an Excel spreadsheet for easy analysis. I gave an example of how I do it in an earlier blog post here.

- Dynamic Management Views and Functions (DMV). Introduced with SQL 2005, DMVs are a great way to see how your server is performing. I’m not going to go into them in depth here since they’ve been written about by many others much better than I can. If you’re not familiar with them, do yourself a favor and start reading about them. They’ll make your life much easier.

- Storing queries in the toolbox. Like all DBAs, I’ve got a number of queries that I run over and over again. There’s numerous ways to store these for easy access, but my favorite for short one-line queries is to store them on the toolbox. Simply open the toolbox (Ctrl + Alt + x) and drag your query over. To recall it, open the toolbox, copy the desired query, and paste it into a new query window.

- Policies. Introduced in SQL 2008, Policy Based Management allows DBAs to manage multiple servers from a central server. Policies can standardize server configurations and check them for compliance.

- Report Services. Built-in reporting capabilities for the same price as the database engine. ‘nuff said.

- IntelliSense. This feature gives the same statement completion that you see in Visual Studio, not surprising since SQL Management Studio uses the same IDE and Visual Studio. Unfortunately it doesn’t work against 2005 servers or earlier. It did in the earlier betas but was removed in the final release. Hopefully Microsoft will put it back.

- Object Explorer Details. You can now see details of different database objects using the details view (F7). It’s also configurable. Just right click on the screen and choose what columns you want to see.

- Running queries against multiple instances. If you set up Registered Groups and register SQL instances by groups, you can execute a query against all servers in the group. You can select all groups, but you can’t choose to ignore instances inside the group. It’s all or nothing. But still, a significant timesaver when you need to run multiple statements against multiple instances.

- Setting variable values. One of the nice things in the .NET world is the ability to use easily change the value of a variable; i += 1 for instance. Now you can do that in SQL too. Instead of

- SQL Community. Not technically part of SQL itself, but I feel it’s important nevertheless. I first began learning about SQL on my own and quickly found myself overwhelmed. There was no time to learn at work, and I needed to find the knowledge to do my job. I found the Chicago SQL User Group and found what I needed; experienced DBAs volunteering to talk about a subject they love. ChiSUG lead me to PASS, an international group with tons of free resources. I began following blogs written by the same people authoring the articles on PASS and presenting at the group meetings. Reading these blogs lead me to twitter, where I can follow SQL experts in real-time and ask for help with the #SQLhelp tag. Finally there’s SQL Saturdays, day-long events with SQL experts speaking on various topics. Notice the recurring theme about these resources; they are all free. I’m constantly amazed by the amount of content that SQL DBAs and developers make available for no cost, and the time they put in as volunteers at in-person events. To the entire SQL community, thank you. I wouldn’t be where I am today without your help.

SET @i = @i + 1you can now do it like this

SET @i += 1Tuesday, June 29, 2010

PIVOT compared to CASE for a crosstab result

Yesterday I was playing with a test query for a coworker using the PIVOT keyword when it dawned on me that I never compared it to using CASE statements. So, here’s the requirements.

There is a table called lab_results, with a partial DDL statement below

CREATE TABLE [dbo].[lab_results](

[lab_result_id] [char](12) NOT NULL,

[patient_id] [char](8) NOT NULL,

[test_code] [numeric](4, 0) NOT NULL,

[sample_date] [datetime] NULL,

.............................

.............................

CONSTRAINT [PK_lab_results] PRIMARY KEY CLUSTERED

(

[lab_result_id] ASC

) ON [PRIMARY]

CREATE NONCLUSTERED INDEX [IX_lab_results_3] ON [dbo].[lab_results]

(

[test_code] ASC,

[sample_date] ASC

) ON [Secondary]

This table has approximately 18 million rows, and you need to get a count of test codes during each year. Anything prior to 1/1/2006 would be counted together. The data would look something like this

Prior to SQL 2005, I would use a series of CASE statements to perform a count of each test code

SELECT test_code

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2010 THEN 1 END) AS [2010]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2009 THEN 1 END) AS [2009]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2008 THEN 1 END) AS [2008]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2007 THEN 1 END) AS [2007]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2006 THEN 1 END) AS [2006]

, SUM(CASE WHEN DATEPART(yy, sample_date) < 2006 THEN 1 END) AS [Pre2006]

FROM lab_results

GROUP BY test_code

Using the new (to SQL 2005 anyway) PIVOT keyword, I could rewrite the query like this

SELECT test_code, [2010], [2009], [2008], [2007], [2006], [2005] AS Pre2006

FROM

(

SELECT test_code, sample_date

, CASE WHEN DATEPART(yy, sample_date) < 2006 THEN 2005

ELSE DATEPART(yy, sample_date)

END AS LabResultYear

FROM lab_results

) AS tmp

PIVOT (COUNT(sample_date) FOR LabResultYear IN ([2010], [2009], [2008], [2007], [2006], [2005])) AS p;

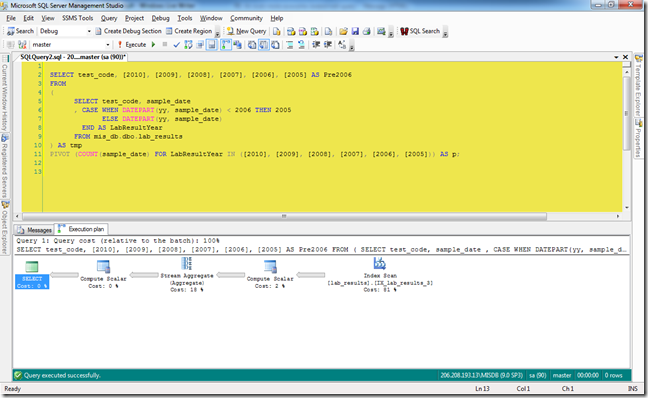

But I was never looked at the execution plan before yesterday to see how each was run. So I finally did, and here’s what I saw. First the plan for the CASE statement…

…and then the plan for the PIVOT example.

At first glance they seem identical. Both are using the same operators and both are using an index seek on the non-clustered index. However there are small differences. The CASE statement completes 2 seconds faster than the PIVOT query, 45 seconds to 47. The index seek,while having an overall higher percentage of the whole, has the same operator costs as the PIVOT. The difference shows in the Stream Aggregate operator. The CASE operator is 10.8, which is 10% of the whole query. In the PIVOT example, the Stream Aggregate is 18% of the whole, and the operator cost is 19.8.

One other point about the PIVOT keyword. If you compare my code snippets to the screenshots of the executions, you’ll notice that I’m actually running against the master database and using a fully qualified name. If I ran it directly against the database where the table lives it would fail. Why? because that database, while running in on a SQL 2005 server, is still at compatibility level 7 (I know,I know). It doesn’t work unless you’re at level 9 or higher.

Monday, June 28, 2010

Report Server issues

I’ve had a lot of Report Server issues in the last few months. I thought we solved it once, only to see it come up again in a slightly different way. But I think I can put it all to bed now.

The first time was a few months ago, right when I left on vacation (isn’t that always the way?). The C drive on one of our reporting servers began to run out of space, filling up 6 – 8 GB in a matter of hours.

Before I get too far ahead of myself, let me describe our layout. We have four regional databases running SQL 2005 Standard Edition, each with data for that region. Two regions are in our corporate headquarters, the other two are in my office. We also have two SQL 2008 Standard Edition servers that run in a virtual environment, one in each datacenter. The reports hosted on each reporting center are identical except for their data sources; the corporate report server fetches data from the two corporate databases, and the local server does the same. The issue we had was on the server in the corporate datacenter.

Our first step was to check the temp files. We noticed a few small files and one massive, 8 GB file that we couldn’t delete without stopping and restarting the report service. A few hours later the massive file reappeared. All of these temp files were prefixed “RSTemp_”.

We moved the location of the temp files to a larger drive to give us more time before the largest temp file grew too large. By default the location is on C:\Program Files\Microsoft SQL Server\MSRS10.<<Instance Name>>\Reporting Services. You can change it by modifying the rsreportserver.config file and adding the entry to the <Service> tag:

<Service>

---

<FileShareStorageLocation>

<Path>I:\RSTempFiles</Path>

</FileShareStorageLocation>

</Service>

That gave us extra space, but it didn’t solve the issue. A little more digging showed that we had a long running report that was also getting bad data. The query for the report was looking for a scalar value but was returning multiples. The query was also a long running query. What we found was happening was that the report execution was timing out, but the query was still active. The user didn’t see the timeout and constantly tried to re-run the report. Consequently this report was continuously paged out to disk, and it never died. Once we cleaned up the data and fixed the reports query, the problem hasn’t reappeared.

The next issue was related to performance. We noticed at certain times of day the report server would get really sluggish. Reports that would complete in 10 seconds would now take 10 minutes. Looking at the server resources showed no increase in CPU, IO, or memory usage. We didn’t see a repeat of the super-big temp file, though there was an increase in the number of temp files.

A little more investigation showed that, when the performance began to degrade, there were locks being held by one process clearing the sessions that blocked another process that was writing session data. We were finally able to track this to a third party application we use to monitor performance. It didn’t look like the software was configured properly.

In investigating both of these issues we looked at the data in the ExecutionLogStorage table in the ReportServer database. If you’re not familiar with this table, you should check out Robert Bruckner’s excellent post on ExecutionLog2 View - Analyzing and Optimizing Reports.

In the first issue, we were able to identify the report by seeing the pagination values in the additional information data. In the second, we could spot when the slowness would begin by seeing the TimeProcessing values rise.

Friday, May 14, 2010

Catching up

First, my laptop went kablooie a few weeks back. I've been using a Pavilion TX 2000 that I ordered custom from HP for the past few years. I chose this model because I wanted a computer that could run the old Tablet XP OS, and I loved the swivel top that let me use it as either a notepad or as a full blown laptop (Apple, you need to add the ability to write on the iPad). Anyway, a few weeks ago my pc wouldn't boot at all, just kept giving me a black screen. I couldn't even get to the BIOS screen or boot from a CD. Luckily I had also bought an extended warranty that was still in effect. So, after opening a ticket with HP, I shipped the unit back to them, and they had it back to me about 10 days later. I was without the laptop for about 2 1/2 weeks, of which 1 was vacation time anyway. And not counting the week between when the unit first went bad and I shipped it back, I hardly missed it.

But I did miss it. I work mostly on my laptop at work and on my commute. This is my lab, where I can install my own programs and other beta apps. It's also the machine I blog from, mostly while on my commute. So it's good to have it back. I'm just finishing installing SQL 2008 R2, VS 2010, and Office 2010. I should be good to go by the time I go back to work on Monday.

Speaking about work, I did mention that I'm just finishing up a two-week vacation. The first week my wife and I took a cruise to the Mexican Riviera. We had a wonderful time. Perfect weather, great scenery, wonderful people. I'd like to go back soon and see some of the sights we missed this time around. This second week is just relaxing, doing yard work, mostly taking it easy.

Next week I'll write about an issue I ran across with our report server just before I left.

Thursday, April 15, 2010

What I learned tonight – tempdb size when SQL starts

I went to the April monthly meeting of the Chicago SQL Server User Group tonight to see Brad McGehee (Blog | Twitter). Brad’s in town to present at SQLSaturday # 31 in Chicago and he also gave a talk to us tonight on optimizing tempdb performance.

It was a good session because it went over tempdb and best practices. Most of the information I already knew but it’s always good to hear it again, even as a refresher. And there’s usually something I took for granted but was wrong about. And tonight was no exception.

I knew that the data and log files for tempdb are recreated when the SQL service starts. But I thought that tempdb got it’s db options from the model database. For instance, if the model database is set at 2 MB for the data and 1 MB for the log then tempdb would inherit those same settings. But i was wrong. As Brad mentioned tonight, tempdb starts out as 8 MB for the data and the log file is 1 MB. On my laptop its’s actually 512 KB but maybe I heard him wrong.

Anyway, to properly resize tempdb you should run the ALTER DATABASE command…

USE master;

GO

ALTER DATABASE tempdb

MODIFY FILE ( NAME = 'tempdev', SIZE = 25600KB , FILEGROWTH = 5120KB );

GO

ALTER DATABASE tempdb

MODIFY FILE ( NAME = 'templog', SIZE = 2048KB , FILEGROWTH = 1024KB );

GO

…and tempdb will be correctly resized after every restart. And you’ve heard that you should create multiple tempdb files depending on the number of CPU’s you have? Well, just do that at the same time. Here I’m renaming, moving, resizing, and adding a file to tempdb;

USE master;

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = 'tempdev', NEWNAME = 'tempdev1', FILENAME = 'C:\SQL2008\DATA\tempdev1.mdf');

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = 'templog', FILENAME = 'C:\SQL2008\DATA\templog.ldf');

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = 'tempdev1', SIZE = 25600KB , FILEGROWTH = 5120KB);

GO

ALTER DATABASE tempdb

MODIFY FILE (NAME = 'templog', SIZE = 2048KB , FILEGROWTH = 1024KB);

GO

ALTER DATABASE tempdb

ADD FILE (NAME = 'tempdev2',

FILENAME = 'C:\SQL2008\DATA\tempdev2.ndf',

SIZE = 25600KB , FILEGROWTH = 5120KB);

GO

The usual disclaimers go here. Don’t use this script without understanding it as it can screw up your server. Don’t use it in production until you’ve testes it.

And I’ll leave the discussion of multiple tempdb files for now.

Tuesday, April 13, 2010

SQL error log finds all copies of resource db after moving db

Just a short post about something interesting I saw in the SQL error log a while back.

A while ago, I moved the system databases off the C drive’s of our servers to another drive on the SAN. That included moving the resource database. You can read Microsoft’s recommendations on moving system db’s on MSDN.

Anyway, I copied the mssqlsystemresource.mdf and mssqlsystemresource.ldf files to a backup location in case of an emergency. But only one database was attached.

This interesting entry in the SQL error log was after I restarted the server:

The resource database has been detected in two different locations. Attaching the resource database in the same directory as the master database at 'O:\SQL2005\DATA\master.mdf' instead of the currently attached resource database at 'O:\SQL2005\Data\mssqlsystemresource.mdf'.

So, SQL saw both the attached db and the unattached backup copy when I restarted the service. The only time I saw the message was the first reboot, not any subsequent restarts.

Wednesday, March 24, 2010

Using the Inserted and Deleted tables outside of a trigger

Just a simple little post about a conversation about triggers I had earlier today with a developer, specifically using them to audit update and delete statements against a table. During our talk I mentioned that, starting in SQL 2005, the inserted and deleted tables were available outside of a trigger. He wasn’t aware of that until I mentioned it. So, for anyone else who’s not familiar with the inserted and deleted tables, here’s a little summary.

Briefly, when you insert, update, or delete records from a table, SQL stores those records in a virtual table; inserted for inserts and deleted for updates and deletes. These tables are available inside of table triggers. Microsoft SQL Books Online has an article explaining the use of the inserted and deleted tables inside of triggers; read that first as a refresher. In our shop we use them for monitoring who is updating and deleting certain critical tables, and we can roll back changes if necessary.

Starting with SQL 2005, you can use the OUTPUT clause to access the inserted and deleted tables outside of DML triggers. You can read the article in Books On Line.

Here’s a simple demo script to run. I added a primary key and a non nullable column to show what happens in error conditions.

USE tempdb;

GO

SET NOCOUNT ON;

GO

-- Create a testing table

USE [tempdb]

GO

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[TestOutput]') AND type in (N'U'))

DROP TABLE [dbo].[TestOutput]

GO

USE [tempdb]

GO

CREATE TABLE [dbo].[TestOutput](

[Field1] [int] NOT NULL,

[Field2] [char](1) NOT NULL,

CONSTRAINT [PK_TestOutput] PRIMARY KEY CLUSTERED

(

[Field1] ASC

)ON [PRIMARY]

) ON [PRIMARY]

GO

--Testing of Outupt statement

DECLARE @TestOutput_Audit TABLE

(

Field1 INT,

Field2 CHAR(1),

[Action] CHAR(1),

Action_by VARCHAR(25),

Action_Date DATETIME

)

-- Testing Insert

INSERT INTO TestOutput (Field1, Field2)

OUTPUT inserted.Field1, inserted.Field2, 'I', SUSER_NAME(), GETDATE()

INTO @TestOutput_Audit (Field1, Field2, [Action],Action_by, Action_Date)

VALUES (1, 'A')

INSERT INTO TestOutput (Field1, Field2)

OUTPUT inserted.Field1, inserted.Field2, 'I', SUSER_NAME(), GETDATE()

INTO @TestOutput_Audit (Field1, Field2, [Action],Action_by, Action_Date)

VALUES (1, 'A')

SELECT * FROM TestOutput

SELECT * FROM @TestOutput_Audit

-- Testing Update

UPDATE TestOutput

SET Field2 = 'B'

OUTPUT deleted.Field1, deleted.Field2, 'U', SUSER_NAME(), GETDATE()

INTO @TestOutput_Audit (Field1, Field2, [Action],Action_by, Action_Date)

WHERE Field1 = 1

UPDATE TestOutput

SET Field2 = NULL

OUTPUT deleted.Field1, deleted.Field2, 'U', SUSER_NAME(), GETDATE()

INTO @TestOutput_Audit (Field1, Field2, [Action],Action_by, Action_Date)

WHERE Field1 = 1

SELECT * FROM TestOutput

SELECT * FROM @TestOutput_Audit

-- Testing Delete

DELETE TestOutput

OUTPUT deleted.Field1, deleted.Field2, 'D', SUSER_NAME(), GETDATE()

INTO @TestOutput_Audit (Field1, Field2, [Action],Action_by, Action_Date)

WHERE Field1 = 1

SELECT * FROM TestOutput

SELECT * FROM @TestOutput_Audit

The INSERT statement simply inserts a single record into my table. Afterwards you can see the same record in the table as well as my audit table. The second record wasn’t added to either table because it’s a primary key violation.

Msg 2627, Level 14, State 1, Line 18

Violation of PRIMARY KEY constraint 'PK_TestOutput'. Cannot insert duplicate key in object 'dbo.TestOutput'.

The statement has been terminated.

Field1 Field2

----------- ------

1 A

Field1 Field2 Action Action_by Action_Date

----------- ------ ------ ------------------------- -----------------------

1 A I PERTELLT3\John 2010-03-24 17:58:03.407

I make a simple UPDATE. Now the table shows the update and the audit shows the value before the update took place. The second update fails because Field2 doesn’t allow NULL values; the main record isn’t updated nor added to the audit table.

Msg 515, Level 16, State 2, Line 33

Cannot insert the value NULL into column 'Field2', table 'tempdb.dbo.TestOutput'; column does not allow nulls. UPDATE fails.

The statement has been terminated.

Field1 Field2

----------- ------

1 B

Field1 Field2 Action Action_by Action_Date

----------- ------ ------ ------------------------- -----------------------

1 A I PERTELLT3\John 2010-03-24 17:58:03.407

1 A U PERTELLT3\John 2010-03-24 17:58:03.413

Finally, when I delete the record I can see the record is gone from the main table but stored in the audit table.

Field1 Field2

----------- ------

Field1 Field2 Action Action_by Action_Date

----------- ------ ------ ------------------------- -----------------------

1 A I PERTELLT3\John 2010-03-24 17:58:03.407

1 A U PERTELLT3\John 2010-03-24 17:58:03.413

1 B D PERTELLT3\John 2010-03-24 17:58:03.413