Yesterday I was playing with a test query for a coworker using the PIVOT keyword when it dawned on me that I never compared it to using CASE statements. So, here’s the requirements.

There is a table called lab_results, with a partial DDL statement below

CREATE TABLE [dbo].[lab_results](

[lab_result_id] [char](12) NOT NULL,

[patient_id] [char](8) NOT NULL,

[test_code] [numeric](4, 0) NOT NULL,

[sample_date] [datetime] NULL,

.............................

.............................

CONSTRAINT [PK_lab_results] PRIMARY KEY CLUSTERED

(

[lab_result_id] ASC

) ON [PRIMARY]

CREATE NONCLUSTERED INDEX [IX_lab_results_3] ON [dbo].[lab_results]

(

[test_code] ASC,

[sample_date] ASC

) ON [Secondary]

This table has approximately 18 million rows, and you need to get a count of test codes during each year. Anything prior to 1/1/2006 would be counted together. The data would look something like this

Prior to SQL 2005, I would use a series of CASE statements to perform a count of each test code

SELECT test_code

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2010 THEN 1 END) AS [2010]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2009 THEN 1 END) AS [2009]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2008 THEN 1 END) AS [2008]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2007 THEN 1 END) AS [2007]

, SUM(CASE WHEN DATEPART(yy, sample_date) = 2006 THEN 1 END) AS [2006]

, SUM(CASE WHEN DATEPART(yy, sample_date) < 2006 THEN 1 END) AS [Pre2006]

FROM lab_results

GROUP BY test_code

Using the new (to SQL 2005 anyway) PIVOT keyword, I could rewrite the query like this

SELECT test_code, [2010], [2009], [2008], [2007], [2006], [2005] AS Pre2006

FROM

(

SELECT test_code, sample_date

, CASE WHEN DATEPART(yy, sample_date) < 2006 THEN 2005

ELSE DATEPART(yy, sample_date)

END AS LabResultYear

FROM lab_results

) AS tmp

PIVOT (COUNT(sample_date) FOR LabResultYear IN ([2010], [2009], [2008], [2007], [2006], [2005])) AS p;

But I was never looked at the execution plan before yesterday to see how each was run. So I finally did, and here’s what I saw. First the plan for the CASE statement…

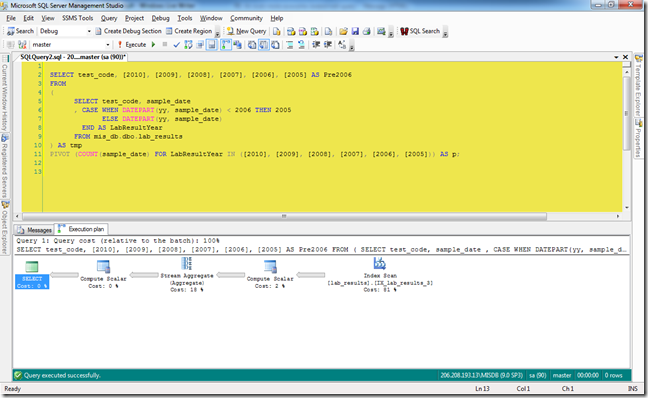

…and then the plan for the PIVOT example.

At first glance they seem identical. Both are using the same operators and both are using an index seek on the non-clustered index. However there are small differences. The CASE statement completes 2 seconds faster than the PIVOT query, 45 seconds to 47. The index seek,while having an overall higher percentage of the whole, has the same operator costs as the PIVOT. The difference shows in the Stream Aggregate operator. The CASE operator is 10.8, which is 10% of the whole query. In the PIVOT example, the Stream Aggregate is 18% of the whole, and the operator cost is 19.8.

One other point about the PIVOT keyword. If you compare my code snippets to the screenshots of the executions, you’ll notice that I’m actually running against the master database and using a fully qualified name. If I ran it directly against the database where the table lives it would fail. Why? because that database, while running in on a SQL 2005 server, is still at compatibility level 7 (I know,I know). It doesn’t work unless you’re at level 9 or higher.