My first attempt at a data warehouse is going to be collecting data from our report servers. In our environment we have two SQL Server 2008 instances that host the reports themselves, and they connect to four data centers for the report data. In each data center we have a table that’s populated with the parameters needed for each report: dates, locations, etc. The reports themselves have only two parameters: the data center that holds the data for the report (we’re using dynamic connection strings) and a GUID that identifies the other needed parameters in that data center.

My goal is to build my warehouse from the four data center report parameter tables and the Execution Log tables on the report servers. The report server logs information each time a report is run: whether it was successful, the parameters for the report, the user, and other details that are helpful when debugging report performance. I want to be able to view summaries for each report, for instance how often each report was run for each location during a specified date range, average report durations, or the number of aborted reports.
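
As a rough sketch of the kind of summary described above, here is a small Python example. It is only an illustration: the `Execution` row layout, its field names, and the sample data are all made up, and they are not the actual Execution Log schema or my warehouse design. It assumes each log row has already been matched to a location from the data center parameter tables.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Execution:
    # Hypothetical, simplified row: one report run already joined to its location.
    report: str
    location: str
    started: datetime
    duration_ms: int
    aborted: bool


def summarize(executions, start, end):
    """Summarize runs per (report, location) where start <= started < end."""
    totals = defaultdict(lambda: {"runs": 0, "aborted": 0, "total_ms": 0})
    for e in executions:
        if not (start <= e.started < end):
            continue  # outside the requested date range
        row = totals[(e.report, e.location)]
        row["runs"] += 1
        row["aborted"] += int(e.aborted)
        row["total_ms"] += e.duration_ms
    # Convert the running totals into run counts, abort counts, and averages.
    return {
        key: {
            "runs": row["runs"],
            "aborted": row["aborted"],
            "avg_ms": row["total_ms"] / row["runs"],
        }
        for key, row in totals.items()
    }


# Made-up sample data for illustration only.
sample = [
    Execution("Sales", "Boston", datetime(2009, 11, 2, 9, 0), 1200, False),
    Execution("Sales", "Boston", datetime(2009, 11, 3, 9, 0), 1800, False),
    Execution("Sales", "Denver", datetime(2009, 11, 3, 10, 0), 500, True),
    Execution("Sales", "Boston", datetime(2009, 12, 1, 9, 0), 9000, False),
]

summary = summarize(sample, datetime(2009, 11, 1), datetime(2009, 12, 1))
for (report, location), stats in sorted(summary.items()):
    print(report, location, stats)
```

Note that this sketch averages the duration over every run in the range, aborted ones included. A real summary might want to report successful and aborted runs separately.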

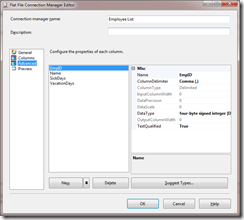

I was going to build an SSIS package to gather the data myself. Then I read an article by Tyler Chessman in the November 2009 issue of SQL Server Magazine that covers about half of what I want to do. In his article, “SQL Server Reporting Services Questions Answered,” Mr. Chessman describes sample reports from Microsoft that you can find on CodePlex. The reports look interesting and I’ll be able to use them, but the best part is that Microsoft has already created a package to extract the data from the report server execution log!

This post is meant to be an overview. I’ll post a review of the CodePlex samples soon, and I’ll start laying out my data warehouse design.