Back when I first started monitoring our production servers, I wanted a way to track the size and growth of all tables in our databases. At the time, we were really only worried about 1 legacy database, and all the objects in that database were in the dbo schema. So tracking size growth was pretty easy; I ran the following script against that database weekly and compared results.

declare @TableName sysname declare mySpace cursor for select name from sys.sysobjects where xtype = 'U' order by name open mySpace fetch next from mySpace into @TableName while @@fetch_status = 0 begin insert into PerfDB.dbo.TableSpace(TableName, NumRows, Reserved, DataUsed, IndexUsed, Unused) exec sp_spaceused @TableName fetch next from mySpace into @TableName end close mySpace deallocate mySpace

After a while, we began to create new projects that required new databases. And these databases had objects that were created into separate schemas. So now, if I wanted to track changes to all tables in all databases, I’d have to run the above code against all databases. Do-able, certainly, but not exactly what I wanted to maintain. I’d have to add the code to each new database we added to the server. And sp_spaceused only returns the name of the table, not the schema name. I wanted to separate the tables by database as well as schema.

So I played around with using two well known undocumented procedures, sp_MSForEachDB and sp_MS_ForEachTable. I’ve used them before, but I never nested foreachtable inside foreachdb. And I still needed a way to separate the schema from the table name. And I wanted to get away from using a cursor.

I found the following script on a SQLServerCentral forum that gave me an idea. Running this script showed that the value of ‘?’ when running sp_MSForEachTable showed the schema name as well as the table name.

declare @command1 varchar(8000) select @command1 = ' IF ''@'' <> ''master'' AND ''@'' <> ''model'' AND ''@'' <> ''msdb'' AND ''@'' <> ''tempdb'' begin use [@] execute sp_MSForEachTable ''print ''''?'''' '' end ' exec sp_MSforeachdb @command1, '@'

So now I can just run sp_spaceused inside the sp_MSForEachTable block and strip out the schema into a separate column.

Here’s the code I use now

CREATE TABLE #TableSpace ( DatabaseName nvarchar(128) NULL, SchemaName nvarchar(128) NULL, TableName nvarchar(128) NOT NULL, rows int NOT NULL, reserved varchar(15) NOT NULL, data varchar(15) NOT NULL, index_size varchar(15) NOT NULL, unused varchar(15) NOT NULL) DECLARE @SQL varchar(8000) SELECT @SQL = ' IF ''@'' <> ''master'' AND ''@'' <> ''model'' AND ''@'' <> ''msdb'' AND ''@'' <> ''tempdb'' BEGIN USE [@] EXECUTE sp_MSForEachTable ''INSERT INTO #TableSpace (TableName, rows, reserved, data, index_size, unused) EXEC sp_spaceused ''''?''''; UPDATE #TableSpace SET SchemaName = LEFT(''''?'''', CHARINDEX(''''.'''', ''''?'''', 1) - 2) WHERE SchemaName IS NULL; UPDATE #TableSpace SET DatabaseName = ''''@'''' WHERE DatabaseName IS NULL; '' END ' EXEC sp_MSforeachdb @SQL, '@'

In my example I’m creating a temporary table, but in practice I use a permanent table. Line 16 is obvious, it skips the system databases. Line 18 calls the sp_spaceused procedure for each table inside each database.

Line 19 is where I strip out the schema name. I just use CHARINDEX function to return the position of the dot separator, then I use the LEFT function to return the characters before it. Since ‘?’ returns the table name as [Schema].[TableName] I also want to skip the brackets, so I move the position one more place to the left to take care of the ] character. I could have been even fancier and did even more string manipulation to take care of the [ character, but it was just as easy to use the REPLACE function on the whole column.

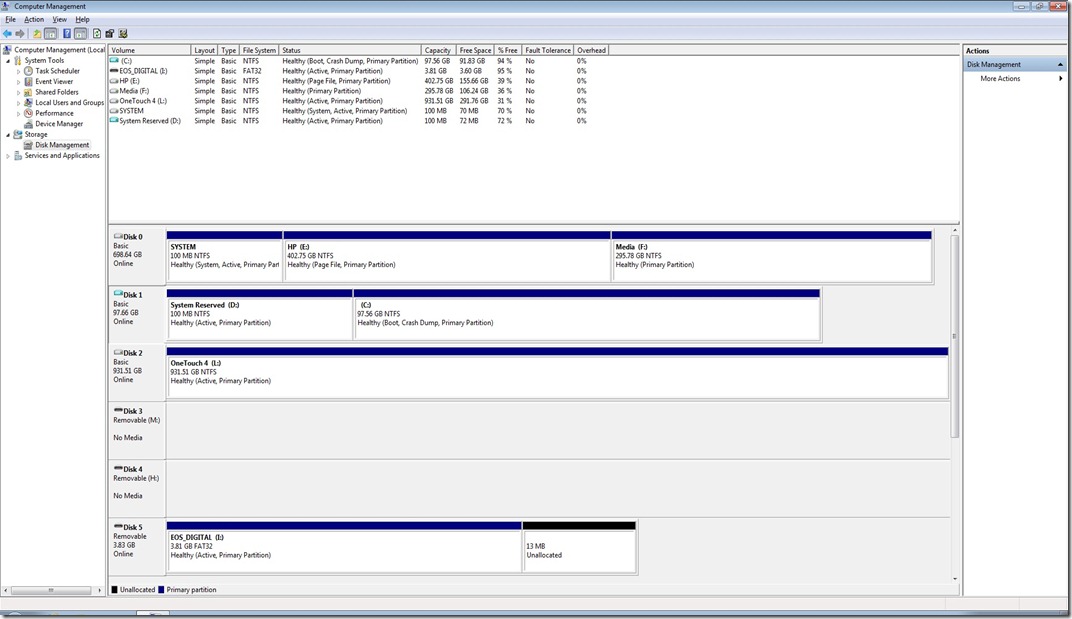

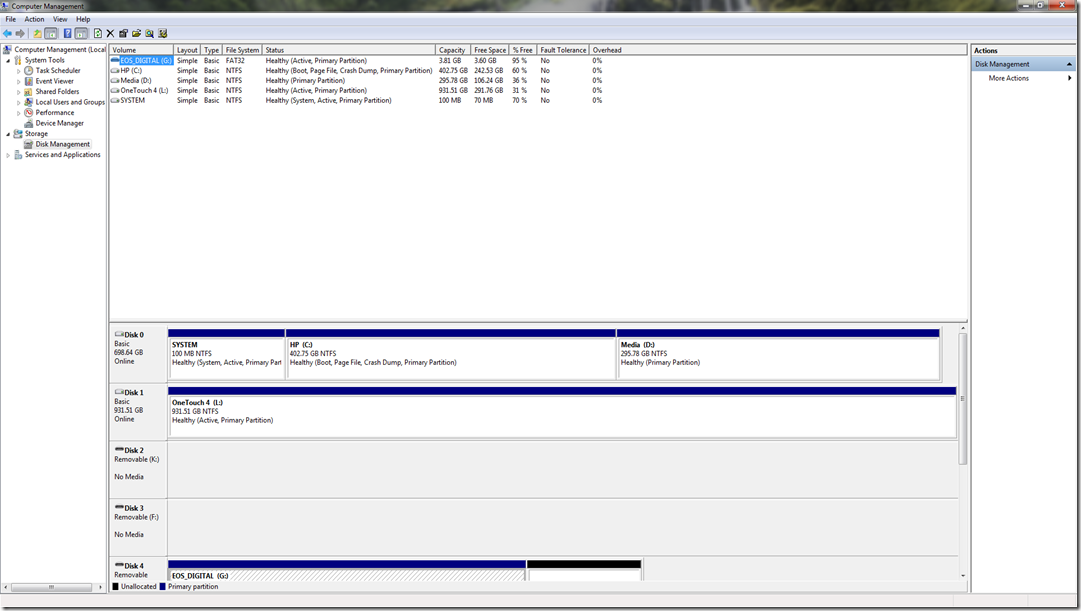

And Line 20 stores the database name. Running the script on my laptop running SQL 2008 gives me results like these

I’ve run this against SQL 2005 and SQL 2008 servers. I haven’t tested it against SQL 2000. As with any code you find on the internet, please test it and become familiar with it before you put it into production.