Are you aware of the default trace that SQL Server runs when it starts? Did you know it’s configurable?

No, you can’t edit it. But you can still run a trace on startup that captures only the events that you want.

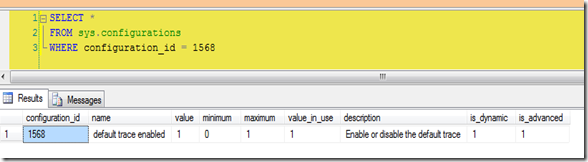

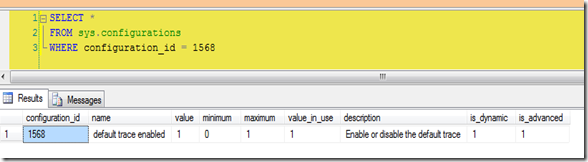

The first thing you should do is check whether the default trace is enabled. Simple enough: run sp_configure with advanced options turned on and look for 'default trace enabled', or query sys.configurations for configuration_id 1568.

SELECT *

FROM sys.configurations

WHERE configuration_id = 1568

On my laptop it is.
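If you'd rather go the sp_configure route, something like the sketch below should work. It assumes you have permission to change server configuration, and it turns on 'show advanced options' first, because 'default trace enabled' is an advanced option.

```sql
-- 'default trace enabled' is an advanced option, so expose advanced options first.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

-- With only the option name supplied, sp_configure reports the setting
-- without changing it. A run_value of 1 means the default trace is enabled.
EXEC sp_configure 'default trace enabled';
GO
```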

There are a few ways to see what the trace is doing. First find the trace id. It should be 1, but you can make sure…

SELECT * FROM sys.traces

SELECT * FROM ::fn_trace_getinfo(0)

…then query fn_trace_geteventinfo(1) to see which events the default trace captures. You can see the descriptions of the events by joining to some system views.

SELECT tc.name AS [Category],

dt.eventid, te.name AS [Event Name],

dt.columnid, tcol.name AS [Column Name]

FROM ::fn_trace_geteventinfo(1) AS dt

INNER JOIN sys.trace_events AS te ON dt.eventid = te.trace_event_id

INNER JOIN sys.trace_columns AS tcol ON dt.columnid = tcol.trace_column_id

INNER JOIN sys.trace_categories AS tc ON te.category_id = tc.category_id
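Another way to see what the trace is doing is to read what it has already captured. The sketch below is one possible approach, not the only one: it pulls the file path of the default trace from sys.traces and reads it with fn_trace_gettable. The variable name @path, the TOP (50) limit, and the choice of columns are my own for illustration.

```sql
-- Find the file the default trace is currently writing to.
DECLARE @path nvarchar(260);

SELECT @path = path
FROM sys.traces
WHERE is_default = 1;

-- Read the most recent events from that file and translate the event ids to names.
SELECT TOP (50)
       te.name AS [Event Name],
       t.StartTime,
       t.LoginName,
       t.DatabaseName,
       t.ObjectName
FROM sys.fn_trace_gettable(@path, DEFAULT) AS t
INNER JOIN sys.trace_events AS te ON t.EventClass = te.trace_event_id
ORDER BY t.StartTime DESC;
```

If the default trace is disabled, @path stays NULL and the query has nothing to read, so run this before turning the trace off.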

But what if, after reviewing the events that the default trace captures, you decide to add to the list? Or maybe you want to skip some events? Remember, you can’t alter the default trace.

First disable the default trace with sp_configure. 'default trace enabled' is an advanced option, so 'show advanced options' must be turned on first. The change doesn’t require a restart, and it also closes the trace.

sp_configure 'default trace enabled', 0;

go

reconfigure
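To confirm the change took effect, you could run a quick check like the one below. This is just a sketch that reuses the same catalog views queried earlier; I'd expect the second query to come back empty once the default trace has been closed.

```sql
-- value_in_use should now be 0 for 'default trace enabled'.
SELECT name, value, value_in_use
FROM sys.configurations
WHERE configuration_id = 1568;

-- The default trace should no longer be listed among the active traces.
SELECT id, path, is_default
FROM sys.traces
WHERE is_default = 1;
```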

Next create a stored procedure in the master database that will create and start your trace. Use sp_trace_create to generate a trace id, use sp_trace_setevent to select the events you want to capture, and finally start the trace with sp_trace_setstatus. Read more about creating traces here. If you aren’t familiar with creating traces via T-SQL, use SQL Profiler to select the events and set the properties you need, then export the trace definition to a script file.

Finally, mark your new procedure to start when SQL Server does by using sp_procoption. This procedure takes three parameters: the name of your procedure, the option ‘startup’, and a true or false value. ‘Startup’ is the only option available; true sets the procedure to autostart, and false sets it not to autostart.

In my demo script below I’ve created a trace that will track what a certain user does in the database as well as when they log in or out.

USE [master]

GO

CREATE PROCEDURE [dbo].[MyDefaultTrace]

AS

begin

declare @rc int

declare @TraceID int

declare @maxfilesize bigint

declare @filecount int

set @maxfilesize = 100

set @filecount = 5

exec @rc = sp_trace_create @TraceID output, 2,

N'C:\Perflogs\MyDefaultTrace',

@maxfilesize, NULL, @filecount

declare @on bit

set @on = 1

exec sp_trace_setevent @TraceID, 14, 7, @on

exec sp_trace_setevent @TraceID, 14, 23, @on

exec sp_trace_setevent @TraceID, 14, 8, @on

exec sp_trace_setevent @TraceID, 14, 64, @on

exec sp_trace_setevent @TraceID, 14, 1, @on

exec sp_trace_setevent @TraceID, 14, 9, @on

exec sp_trace_setevent @TraceID, 14, 21, @on

exec sp_trace_setevent @TraceID, 14, 25, @on

exec sp_trace_setevent @TraceID, 14, 41, @on

exec sp_trace_setevent @TraceID, 14, 49, @on

exec sp_trace_setevent @TraceID, 14, 57, @on

exec sp_trace_setevent @TraceID, 14, 2, @on

exec sp_trace_setevent @TraceID, 14, 6, @on

exec sp_trace_setevent @TraceID, 14, 10, @on

exec sp_trace_setevent @TraceID, 14, 14, @on

exec sp_trace_setevent @TraceID, 14, 26, @on

exec sp_trace_setevent @TraceID, 14, 66, @on

exec sp_trace_setevent @TraceID, 14, 3, @on

exec sp_trace_setevent @TraceID, 14, 11, @on

exec sp_trace_setevent @TraceID, 14, 35, @on

exec sp_trace_setevent @TraceID, 14, 51, @on

exec sp_trace_setevent @TraceID, 14, 12, @on

exec sp_trace_setevent @TraceID, 14, 60, @on

exec sp_trace_setevent @TraceID, 15, 7, @on

exec sp_trace_setevent @TraceID, 15, 15, @on

exec sp_trace_setevent @TraceID, 15, 23, @on

exec sp_trace_setevent @TraceID, 15, 8, @on

exec sp_trace_setevent @TraceID, 15, 16, @on

exec sp_trace_setevent @TraceID, 15, 64, @on

exec sp_trace_setevent @TraceID, 15, 9, @on

exec sp_trace_setevent @TraceID, 15, 17, @on

exec sp_trace_setevent @TraceID, 15, 41, @on

exec sp_trace_setevent @TraceID, 15, 49, @on

exec sp_trace_setevent @TraceID, 15, 57, @on

exec sp_trace_setevent @TraceID, 15, 6, @on

exec sp_trace_setevent @TraceID, 15, 10, @on

exec sp_trace_setevent @TraceID, 15, 14, @on

exec sp_trace_setevent @TraceID, 15, 18, @on

exec sp_trace_setevent @TraceID, 15, 26, @on

exec sp_trace_setevent @TraceID, 15, 66, @on

exec sp_trace_setevent @TraceID, 15, 3, @on

exec sp_trace_setevent @TraceID, 15, 11, @on

exec sp_trace_setevent @TraceID, 15, 35, @on

exec sp_trace_setevent @TraceID, 15, 51, @on

exec sp_trace_setevent @TraceID, 15, 12, @on

exec sp_trace_setevent @TraceID, 15, 60, @on

exec sp_trace_setevent @TraceID, 15, 13, @on

exec sp_trace_setevent @TraceID, 15, 21, @on

exec sp_trace_setevent @TraceID, 43, 7, @on

exec sp_trace_setevent @TraceID, 43, 15, @on

exec sp_trace_setevent @TraceID, 43, 8, @on

exec sp_trace_setevent @TraceID, 43, 64, @on

exec sp_trace_setevent @TraceID, 43, 1, @on

exec sp_trace_setevent @TraceID, 43, 9, @on

exec sp_trace_setevent @TraceID, 43, 41, @on

exec sp_trace_setevent @TraceID, 43, 2, @on

exec sp_trace_setevent @TraceID, 43, 10, @on

exec sp_trace_setevent @TraceID, 43, 3, @on

exec sp_trace_setevent @TraceID, 43, 11, @on

exec sp_trace_setevent @TraceID, 43, 35, @on

exec sp_trace_setevent @TraceID, 43, 51, @on

exec sp_trace_setevent @TraceID, 43, 12, @on

exec sp_trace_setevent @TraceID, 43, 5, @on

exec sp_trace_setevent @TraceID, 43, 13, @on

exec sp_trace_setevent @TraceID, 43, 6, @on

exec sp_trace_setevent @TraceID, 43, 14, @on

exec sp_trace_setevent @TraceID, 43, 62, @on

exec sp_trace_setevent @TraceID, 45, 7, @on

exec sp_trace_setevent @TraceID, 45, 8, @on

exec sp_trace_setevent @TraceID, 45, 64, @on

exec sp_trace_setevent @TraceID, 45, 1, @on

exec sp_trace_setevent @TraceID, 45, 41, @on

exec sp_trace_setevent @TraceID, 45, 10, @on

exec sp_trace_setevent @TraceID, 45, 3, @on

exec sp_trace_setevent @TraceID, 45, 11, @on

exec sp_trace_setevent @TraceID, 45, 35, @on

exec sp_trace_setevent @TraceID, 45, 51, @on

exec sp_trace_setevent @TraceID, 45, 12, @on

exec sp_trace_setevent @TraceID, 45, 5, @on

exec sp_trace_setevent @TraceID, 45, 13, @on

exec sp_trace_setevent @TraceID, 45, 6, @on

exec sp_trace_setevent @TraceID, 45, 14, @on

exec sp_trace_setevent @TraceID, 45, 62, @on

exec sp_trace_setevent @TraceID, 45, 15, @on

exec sp_trace_setevent @TraceID, 41, 7, @on

exec sp_trace_setevent @TraceID, 41, 15, @on

exec sp_trace_setevent @TraceID, 41, 8, @on

exec sp_trace_setevent @TraceID, 41, 64, @on

exec sp_trace_setevent @TraceID, 41, 1, @on

exec sp_trace_setevent @TraceID, 41, 41, @on

exec sp_trace_setevent @TraceID, 41, 10, @on

exec sp_trace_setevent @TraceID, 41, 3, @on

exec sp_trace_setevent @TraceID, 41, 11, @on

exec sp_trace_setevent @TraceID, 41, 35, @on

exec sp_trace_setevent @TraceID, 41, 51, @on

exec sp_trace_setevent @TraceID, 41, 12, @on

exec sp_trace_setevent @TraceID, 41, 5, @on

exec sp_trace_setevent @TraceID, 41, 13, @on

exec sp_trace_setevent @TraceID, 41, 6, @on

exec sp_trace_setevent @TraceID, 41, 14, @on

-- Set the Filters

declare @intfilter int

declare @bigintfilter bigint

exec sp_trace_setfilter @TraceID, 11, 0, 6, N'BadUser'

exec sp_trace_setstatus @TraceID, 1

end

GO

EXEC sp_procoption N'MyDefaultTrace', 'startup', '1'

GO
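To double-check that the procedure really is flagged for startup, a query along these lines should do it. It's a minimal sketch that assumes the procedure name used in the script above.

```sql
USE [master];
GO

-- ExecIsStartup returns 1 when the procedure is marked to run at startup.
SELECT name,
       OBJECTPROPERTY(object_id, 'ExecIsStartup') AS is_startup
FROM sys.procedures
WHERE name = N'MyDefaultTrace';
```

To undo it later, call sp_procoption again with 'off' as the last parameter.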

Now when I check which traces are running, trace id 1 is the trace created in my procedure. The trace start is also written to the SQL error log when the master database starts.
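Here is a sketch of how you might check on the trace and, if needed, shut it down by hand. It assumes the file path from the demo script above and looks up the trace id by that path rather than trusting that it will be 1.

```sql
-- List every trace the server knows about.
SELECT id, path, status, is_default, start_time
FROM sys.traces;

-- Look up the custom trace by its file path (the path is the one from the demo script).
DECLARE @TraceID int;

SELECT @TraceID = id
FROM sys.traces
WHERE path LIKE N'C:\Perflogs\MyDefaultTrace%';

IF @TraceID IS NOT NULL
BEGIN
    EXEC sp_trace_setstatus @TraceID, 0;  -- 0 = stop the trace
    EXEC sp_trace_setstatus @TraceID, 2;  -- 2 = close the trace and delete its definition
END
```

A trace has to be stopped before it can be closed, which is why the two calls run in that order.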

As always, please test this on a server you can play with before putting it into production.